What is PrivateGPT?

Background

Generative AI, such as OpenAI's ChatGPT, is a powerful tool that streamlines tasks such as writing emails and reviewing reports and documents. These benefits come at a cost, however: generative AI has raised serious data privacy concerns, leading many enterprises to block ChatGPT internally.

This is for good reason. ChatGPT was temporarily banned in Italy and has already had its first data leak, which exposed personal information including some credit card details.

Enterprises also don't want their data retained for model improvement or performance monitoring. Systems trained on such data can learn and regurgitate the PII it contains, as a Korean lovebot did, leading to the unintentional disclosure of personal information.

How It Works

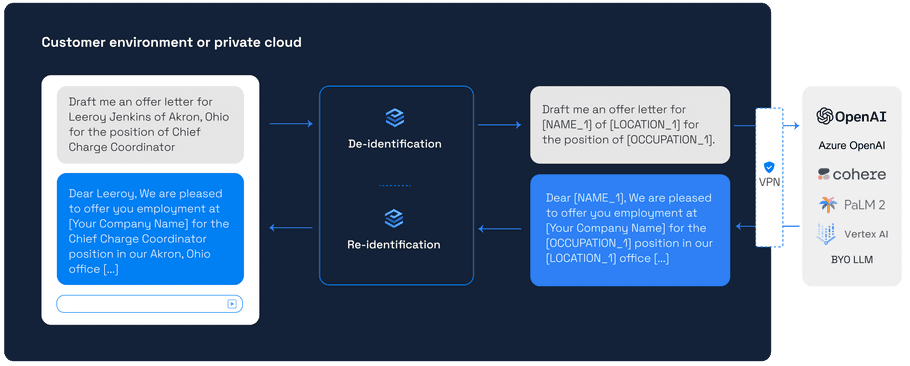

PrivateGPT aims to offer the same experience as ChatGPT and the OpenAI API, whilst mitigating the privacy concerns. It uses Private AI's user-hosted PII identification and redaction container to identify PII and redact prompts before they are sent to Microsoft's Azure OpenAI Service. For example, if the original prompt is "Invite Mr Jones for an interview on the 25th May", then this is what is sent to ChatGPT: "Invite [NAME_1] for an interview on the [DATE_1]".

OpenAI's models can work with redacted prompts and include the redaction markers in their responses. For example, the completion for the above prompt is "Please join us for an interview with [NAME_1] on [DATE_1]." Once the completion is received, PrivateGPT replaces the redaction markers with the original PII, producing the final output the user sees: "Please join us for an interview with Mr Jones on 25th May."

This works surprisingly well, because PII is often not needed to generate the completion. In the above example, neither the name nor the date is relevant to generating a response. It can even be preferable to remove personal identifiers, as this can help reduce the scope for bias in ChatGPT's completions. The end result is similar, but personally identifiable information such as addresses, phone numbers, and credit card details is never shared outside an organization's compute systems with cloud-hosted LLMs like those provided by OpenAI.
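The redact, complete, and re-identify round trip described above can be sketched in a few lines of Python. This is only an illustration of how numbered markers map back to the original text: the `redact` and `reidentify` functions are hypothetical names, and the entity list is hard-coded here, whereas in PrivateGPT the entities are detected by Private AI's container.

```python
import re

def redact(prompt, entities):
    """Replace each (text, label) entity with a numbered marker.

    Returns the redacted prompt and a marker -> original text map.
    The entities are supplied by the caller (an assumption for this sketch).
    """
    counters = {}
    marker_to_text = {}
    redacted = prompt
    for text, label in entities:
        counters[label] = counters.get(label, 0) + 1
        marker = f"[{label}_{counters[label]}]"
        redacted = redacted.replace(text, marker)
        marker_to_text[marker] = text
    return redacted, marker_to_text

# Matches markers such as [NAME_1] or [DATE_12]
MARKER_PATTERN = re.compile(r"\[[A-Z_]+_\d+\]")

def reidentify(completion, marker_to_text):
    """Swap known markers back to the original text; leave unknown markers as-is."""
    return MARKER_PATTERN.sub(
        lambda match: marker_to_text.get(match.group(0), match.group(0)),
        completion,
    )

prompt = "Invite Mr Jones for an interview on the 25th May"
entities = [("Mr Jones", "NAME"), ("25th May", "DATE")]  # hard-coded for illustration

redacted_prompt, marker_map = redact(prompt, entities)
print(redacted_prompt)  # Invite [NAME_1] for an interview on the [DATE_1]

# Stand-in for the completion returned by the LLM
completion = "Please join us for an interview with [NAME_1] on [DATE_1]."
print(reidentify(completion, marker_map))
```

Only the redacted prompt would leave the organization's systems in this sketch; the marker map stays local and is used solely to restore the completion.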

PrivateGPT comes in two flavours: a chat UI for end users (similar to chat.openai.com) and a headless / API version that allows the functionality to be built into applications and custom UIs.

End-User Chat Interface

The PrivateGPT chat UI consists of a web interface and Private AI's container. The web interface functions similarly to ChatGPT, except that prompts are redacted and completions are re-identified using the Private AI container instance. The UI also uses the Microsoft Azure OpenAI Service instead of OpenAI directly, because the Azure service offers better privacy and security standards. For further details, please see UI Basic Use.

You can try it yourself for free today with a container instance hosted by Private AI at chat.private-ai.com. If you're interested in a deployment inside your own compute systems, please contact our sales team.

Headless/API Interface

Note: Whilst PrivateGPT is primarily designed for use with OpenAI's ChatGPT, it also works with GPT-4 and other providers such as Cohere and Anthropic.

Starting with version 3.2.0, PrivateGPT can also be used via an API that makes POST requests to Private AI's container. It works by placing a de-identify call before each LLM call and a re-identify call after it. Please see PrivateGPT Headless Interface for further details.
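The pattern of wrapping each LLM call in a de-identify and a re-identify step can be sketched as a small Python function that takes the three steps as callables. Everything named here (`private_completion` and the `stub_*` functions) is hypothetical and exists only to show the call order. In a real deployment, the de-identify and re-identify callables would make POST requests to Private AI's container, and the completion callable would call the chosen LLM provider; those endpoints and payloads are not shown because they are documented in PrivateGPT Headless Interface rather than here.

```python
from typing import Callable, Dict, List, Tuple

MarkerMap = Dict[str, str]  # redaction marker -> original text

def private_completion(
    prompt: str,
    deidentify: Callable[[str], Tuple[str, MarkerMap]],
    complete: Callable[[str], str],
    reidentify: Callable[[str, MarkerMap], str],
) -> str:
    """Run one LLM call with de-identification before and re-identification after."""
    redacted_prompt, marker_map = deidentify(prompt)   # PII removed locally
    redacted_completion = complete(redacted_prompt)    # only redacted text reaches the LLM
    return reidentify(redacted_completion, marker_map) # PII restored locally

# --- Stubs standing in for the container and the LLM (assumptions for this sketch) ---

def stub_deidentify(prompt: str) -> Tuple[str, MarkerMap]:
    marker_map = {"[NAME_1]": "Mr Jones", "[DATE_1]": "25th May"}
    for marker, original in marker_map.items():
        prompt = prompt.replace(original, marker)
    return prompt, marker_map

seen_by_llm: List[str] = []  # records what the "LLM" actually received

def stub_complete(redacted_prompt: str) -> str:
    seen_by_llm.append(redacted_prompt)
    return "Please join us for an interview with [NAME_1] on [DATE_1]."

def stub_reidentify(text: str, marker_map: MarkerMap) -> str:
    for marker, original in marker_map.items():
        text = text.replace(marker, original)
    return text

answer = private_completion(
    "Invite Mr Jones for an interview on the 25th May",
    stub_deidentify,
    stub_complete,
    stub_reidentify,
)
print(answer)
print(seen_by_llm)
```

Passing the three steps in as callables keeps the wrapper independent of any particular LLM provider, which fits the note above that PrivateGPT also works with providers other than OpenAI.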