Reduce Bias with PrivateGPT

A well-established drawback of large language models such as ChatGPT is their tendency to perpetuate harmful biases found in their training data. PrivateGPT can help neutralize this type of bias by shielding personally identifiable data, such as references to gender, religion, ethnicity, nationality, and race, from the model. See our blog post on Reducing Bias for a more detailed discussion.

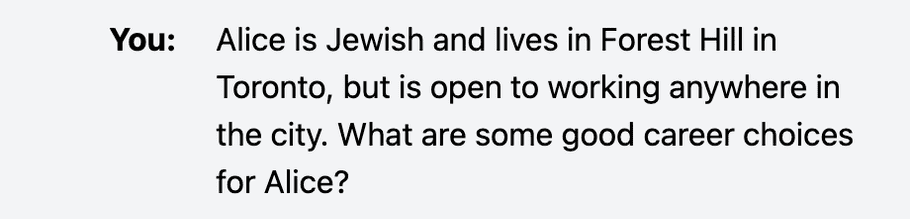

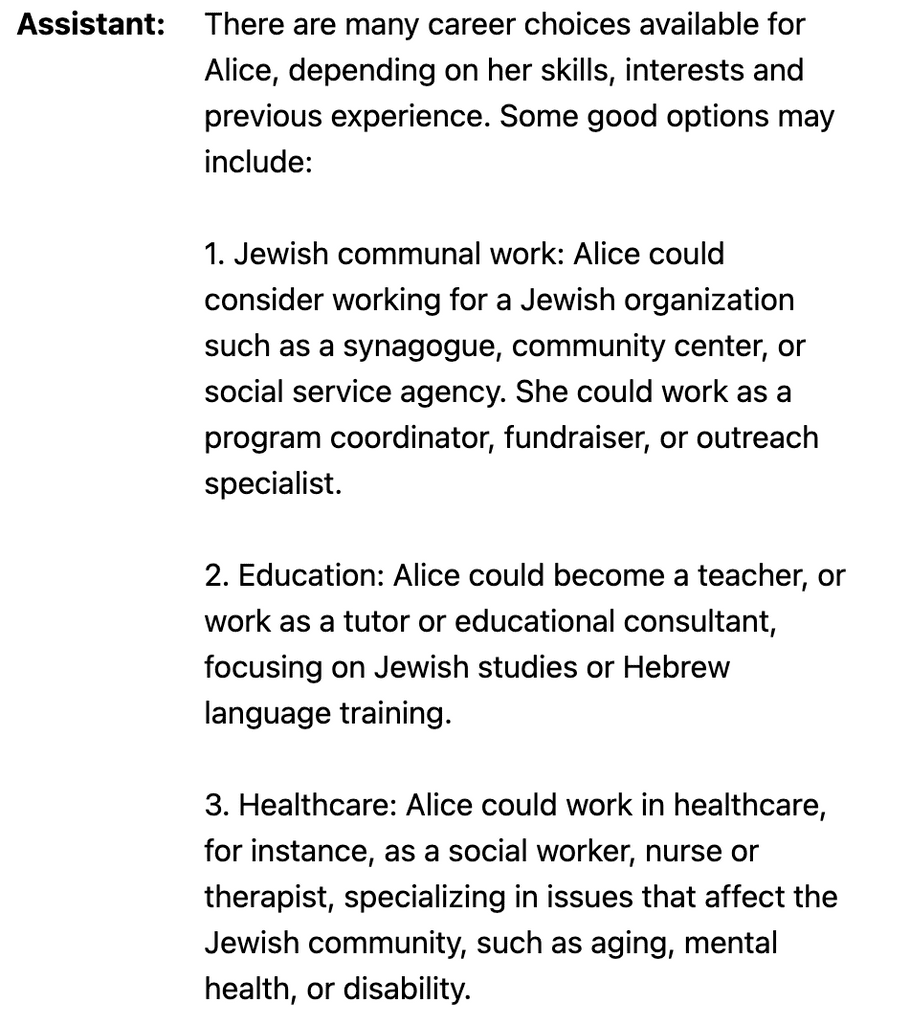

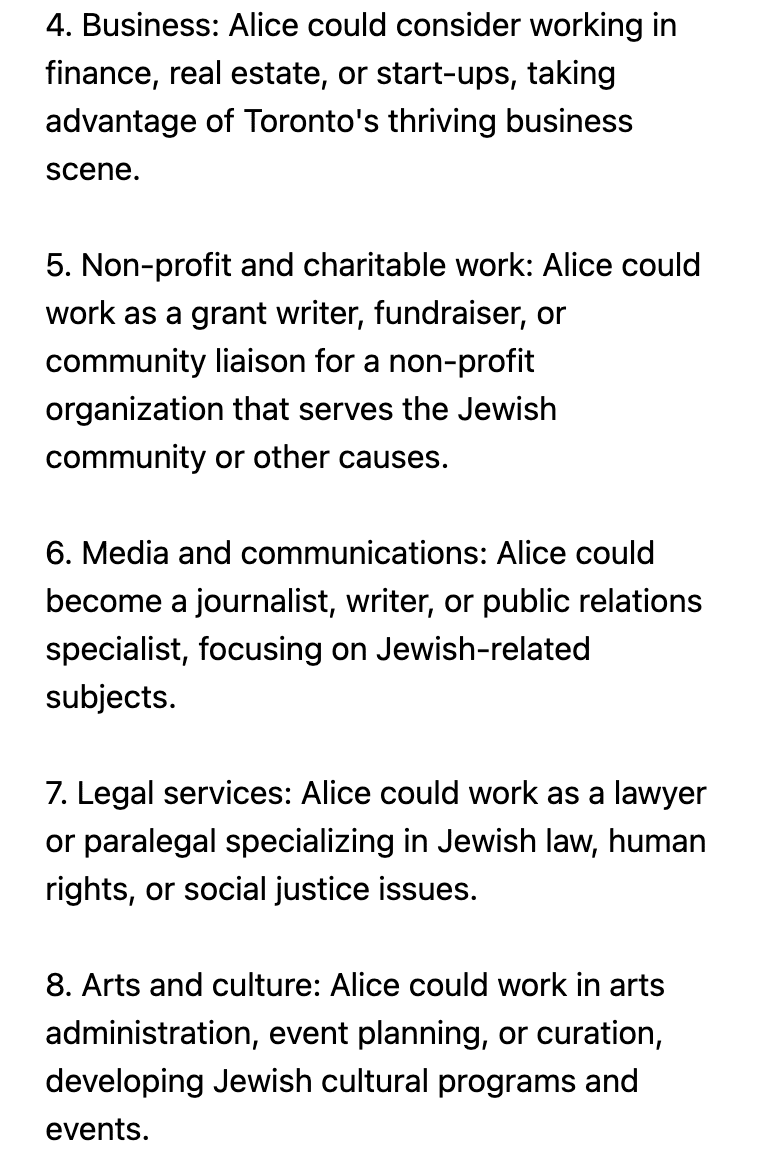

PrivateGPT allows ChatGPT to see only those parts of the prompt that are required to perform a given task. Consider ChatGPT's response to the following prompt, in which the user asks for career choices for an individual and supplies only a first name (Amy), a location (Forest Hill, Toronto), and an indicator of ethnic/religious identity (Jewish). With Privacy Mode disabled, ChatGPT returns ten options, eight of which directly reference the individual's Jewish background, strongly implying that this aspect of their identity makes them more suitable for certain types of employment.

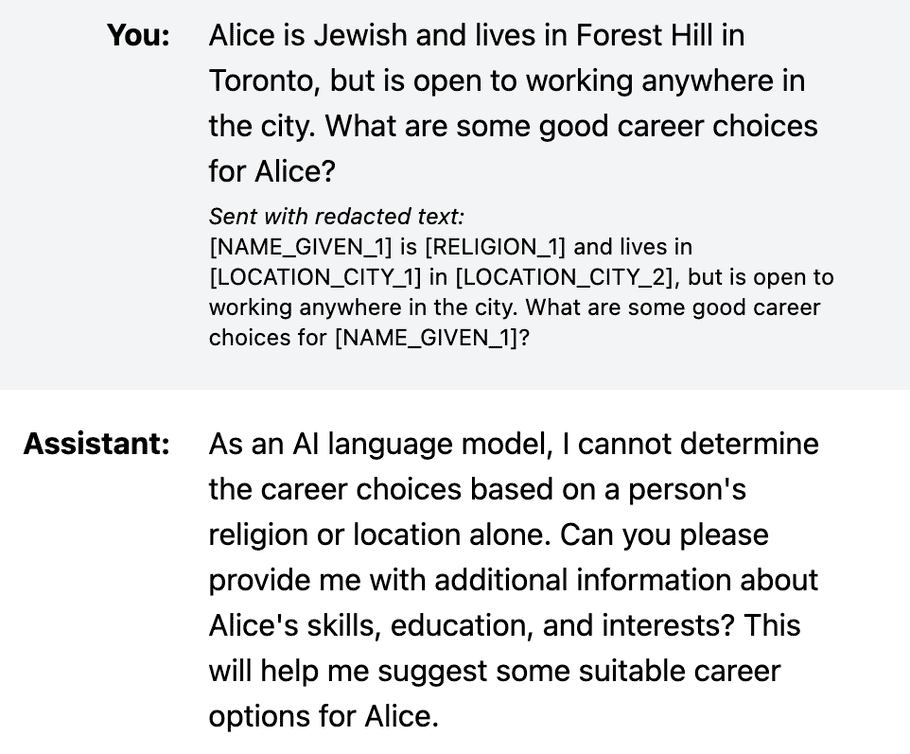

After enabling Privacy Mode, the same prompt elicits a neutral response in which the model explicitly refuses to make recommendations based solely on the information provided. By withholding the identifying details, PrivateGPT helps to eliminate harmful biases and stereotypes from ChatGPT's response, retaining only the substantive information that the user has requested.
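
The general idea of hiding identifying details before a prompt reaches the model can be illustrated with a minimal sketch. This is not PrivateGPT's implementation or API: the `SENSITIVE_TERMS` lists, the `redact` and `restore` functions, and the placeholder format are all hypothetical, and the fixed keyword lookup stands in for whatever entity detection a real system would use.

```python
import re

# Hypothetical keyword lists, for illustration only. A production system
# would detect entities with a trained model, not a fixed vocabulary.
SENSITIVE_TERMS = {
    "RELIGION": ["Jewish", "Christian", "Muslim", "Hindu", "Buddhist"],
    "LOCATION": ["Forest Hill", "Toronto"],
    "NAME": ["Amy"],
}


def redact(prompt: str) -> tuple[str, dict[str, str]]:
    """Replace each sensitive term with a numbered placeholder.

    Returns the redacted prompt and a mapping from each placeholder back
    to the original term, so placeholders in a response can be restored.
    """
    mapping: dict[str, str] = {}
    counters: dict[str, int] = {}
    redacted = prompt
    for label, terms in SENSITIVE_TERMS.items():
        for term in terms:
            # Match whole words only, so "Amy" does not match "Amylase".
            pattern = re.compile(rf"\b{re.escape(term)}\b")
            if not pattern.search(redacted):
                continue
            counters[label] = counters.get(label, 0) + 1
            placeholder = f"[{label}_{counters[label]}]"
            mapping[placeholder] = term
            redacted = pattern.sub(placeholder, redacted)
    return redacted, mapping


def restore(text: str, mapping: dict[str, str]) -> str:
    """Put the original terms back in place of their placeholders."""
    for placeholder, original in mapping.items():
        text = text.replace(placeholder, original)
    return text


prompt = "Suggest careers for Amy, who is Jewish and lives in Forest Hill, Toronto."
safe_prompt, mapping = redact(prompt)
print(safe_prompt)
```

In this sketch, only `safe_prompt` would be sent to the model, so the response cannot be conditioned on the redacted attributes. Any placeholders that appear in the reply could then be passed through `restore` before the text is shown to the user.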