LLMs: PrivateGPT Headless API

Introduction

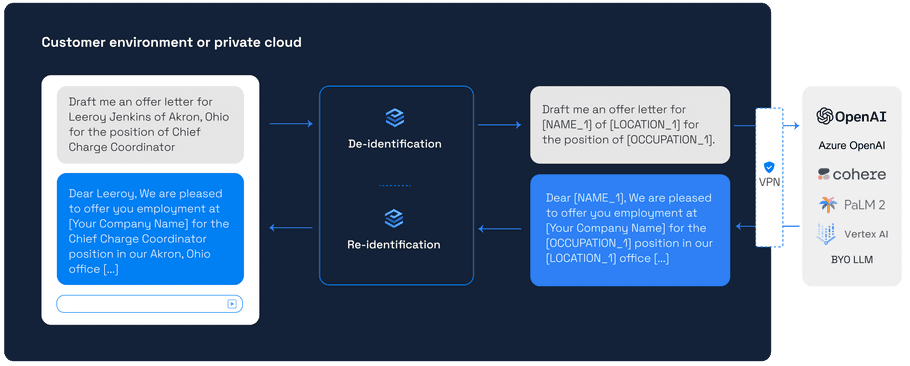

In this guide, you'll learn how to use the API version of PrivateGPT via the Private AI Docker container. The guide is centred around handling personally identifiable data: you'll deidentify user prompts, send them to OpenAI's ChatGPT, and then re-identify the responses. This ensures confidential information remains safe while interacting with AI models. The process looks like this:
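Before we touch any service, here is a toy, offline sketch of the three steps. It is only an illustration and not Private AI's method. The hypothetical `toy_redact` replaces strings from a hard-coded list, whereas Private AI detects PII with a model. The `[PII_n]` placeholders are invented for the example, and `fake_llm` stands in for ChatGPT. The entity dictionaries use the same `processed_text` and `text` keys that this guide passes to re-identification later.

```python
# Toy, offline illustration of the redact -> prompt -> re-identify flow.
# All helpers here are hypothetical stand-ins: a real setup uses the
# Private AI container for detection/re-identification and a real LLM.

def toy_redact(text, known_values):
    """Replace each known value with a numbered placeholder.

    Returns the redacted text and a list of entity dicts shaped like
    {'processed_text': placeholder, 'text': original_value}.
    """
    entities = []
    for i, value in enumerate(known_values, start=1):
        placeholder = f"[PII_{i}]"  # invented placeholder format
        if value in text:
            text = text.replace(value, placeholder)
            entities.append({"processed_text": placeholder, "text": value})
    return text, entities


def fake_llm(prompt):
    """Stand-in for ChatGPT: wraps the prompt in a canned reply."""
    return f"Nice to meet you! You said: {prompt}"


def toy_reidentify(text, entities):
    """Swap each placeholder in the text back to its original value."""
    for entity in entities:
        text = text.replace(entity["processed_text"], entity["text"])
    return text


redacted, entities = toy_redact(
    "Hey! I'm Joe, a developer at Private AI!", ["Joe", "Private AI"]
)
reply = fake_llm(redacted)          # the "LLM" only ever sees placeholders
restored = toy_reidentify(reply, entities)

print(redacted)   # Hey! I'm [PII_1], a developer at [PII_2]!
print(restored)   # Nice to meet you! You said: Hey! I'm Joe, a developer at Private AI!
```

The square brackets make each placeholder unambiguous, so `[PII_1]` can never match part of `[PII_10]` during re-identification.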

In addition to this guide, you might find the following notebooks and code examples useful:

| Title | LLM Provider | Format |
|---|---|---|
| Safely work with Company Confidential Information with Private AI | OpenAI | Notebook |
| SEC Filing: Document Summarization and Question Answering | Cohere | Notebook |
| SEC Filing: Document Summarization and Question Answering | | Notebook |
| Secure Prompting with PrivateGPT | OpenAI, Google or Cohere | Python Script |
| Secure Prompting with PrivateGPT - Files | OpenAI, Google or Cohere | Python Script |

Environment Setup and Configuration

First, we need to pull and run the Private AI Docker container, which is responsible for data deidentification and re-identification. Follow the instructions in the Quickstart Guide to set up your Docker container.
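Once the container is running, you may want to confirm that it is reachable before going further. The sketch below is a generic TCP check and not a Private AI API. `container_is_listening` is a hypothetical helper, and its defaults assume the localhost:8080 address used later in this guide. It only confirms that something accepts connections on that port. It does not prove that the service is the Private AI container.

```python
import socket


def container_is_listening(host="localhost", port=8080, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout.

    Hypothetical helper: it checks that the port is open, not which
    service is listening on it.
    """
    try:
        # create_connection raises OSError (refused, timed out, unreachable)
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

For example, call `container_is_listening("localhost", 8080)` at the top of your script and stop early if it returns False.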

The next step is setting up the rest of your environment: installing the necessary Python libraries, importing the required modules in your script, and configuring your API keys.

Let's install the privateai_client and openai Python libraries using pip. This guide has been tested with Private AI Client version 1.3.2 and OpenAI Python library version 0.28.0. The code uses openai.ChatCompletion, which was removed in openai 1.0, so pin both versions:

```bash
pip install privateai_client==1.3.2 openai==0.28.0
```

Now, in your Python script, import the client libraries and initialize them along with the constants that describe where the container is running:

```python
from privateai_client import PAIClient, request_objects
import openai
import os

# Initialize the OpenAI client
openai.api_key = os.getenv('OPENAI_KEY')

# Initialize the Private AI client
PRIVATEAI_SCHEME = 'http'
PRIVATEAI_HOST = 'localhost'
PRIVATEAI_PORT = '8080'

client = PAIClient(PRIVATEAI_SCHEME, PRIVATEAI_HOST, PRIVATEAI_PORT)
```

Remember to set a local environment variable named OPENAI_KEY to your OpenAI API key. Also set PRIVATEAI_SCHEME, PRIVATEAI_HOST and PRIVATEAI_PORT to match where your Docker container is running.
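A missing OPENAI_KEY only surfaces when the first OpenAI call fails. An openai 1.x install fails as soon as openai.ChatCompletion is used. If you'd like to catch both problems up front, here is a minimal sketch of a startup check. `check_setup` is a hypothetical helper and not part of either client library. Pass it `os.environ` and the installed version string, which you can read with `importlib.metadata.version("openai")`.

```python
def check_setup(env, openai_version):
    """Return a list of configuration problems (empty if none were found).

    Hypothetical helper.
    env: a mapping such as os.environ.
    openai_version: installed openai package version string, e.g. "0.28.0".
    """
    problems = []
    # An unset or empty key only fails later, at the first API call
    if not env.get("OPENAI_KEY"):
        problems.append("OPENAI_KEY is not set")
    # openai.ChatCompletion no longer exists from openai 1.0 onwards
    major = int(openai_version.split(".")[0])
    if major >= 1:
        problems.append(
            f"openai {openai_version} is installed, but this guide uses the "
            "pre-1.0 openai.ChatCompletion API (tested with 0.28.0)"
        )
    return problems
```

For example, run `problems = check_setup(os.environ, importlib.metadata.version("openai"))` after `import importlib.metadata`. Then exit with those messages if the list is not empty.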

Creating the Redaction Function

Let's create a function that redacts personal data from the user input. Along with the redacted text, the response contains the detected entities, which we'll use later to re-identify the model's response:

```python
def redact_text(text):
    # The request takes a list of texts; we send a single prompt
    text_request = request_objects.process_text_obj(text=[text])
    return client.process_text(text_request)
```

Crafting the Reidentification Function

Next, we'll define a function that does the opposite of the redaction function, enabling the re-identification of redacted entities in the text:

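```python
def reidentify_text(text, entities):
    # Keep only the entity fields the re-identification request needs
    entity_mappings = list(map(map_entity, entities))
    reid_request = request_objects.reidentify_text_obj(processed_text=[text], entities=entity_mappings)
    reid_text = client.reidentify_text(reid_request)
    # The response body is a list with one entry per input text
    return reid_text.response.json()[0]
```

The redaction service returns more information about each entity than re-identification needs. Let's create a small mapping function that keeps only the redacted placeholder (processed_text) and the original value (text):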

```python
def map_entity(entity):
    return {
        'processed_text': entity['processed_text'],
        'text': entity['text']
    }
```

Function for Calling OpenAI

We'll also need a function that sends a prompt to OpenAI's gpt-3.5-turbo chat model and returns the text of its reply. Here's a minimal implementation using the openai 0.28 API:

```python
def prompt_chat_gpt(text):
    completion = openai.ChatCompletion.create(
        model="gpt-3.5-turbo",
        messages=[{"role": "user", "content": text}]
    )
    return completion.choices[0].message['content']
```

Bringing it All Together

We're now ready to put all the components together to form our final solution. This is how we redact a prompt, dispatch it to the GPT model, and reidentify the response:

```python
def prompt_private_gpt(prompt):
    print("******* ORIGINAL TEXT *******")
    print(prompt)

    # Redact PII before anything is sent to OpenAI
    redacted_object = redact_text(prompt)
    print("\n******* REDACTED TEXT **********")
    print(redacted_object.processed_text)

    # Only the redacted text reaches the model
    chatgpt_response = prompt_chat_gpt(redacted_object.processed_text[0])
    print("\n******* OPEN AI RESPONSE WITH REDACTIONS ********")
    print(chatgpt_response)

    # Replace the placeholders in the reply with the original values
    reidentified_text = reidentify_text(chatgpt_response, redacted_object.entities[0])
    print("\n******* OPEN AI RESPONSE REIDENTIFIED ********")
    print(reidentified_text)

    return reidentified_text
```

Done

There you have it. Enjoy chatting while preserving your privacy! Try it with a prompt of your own:

```python
openai_response = prompt_private_gpt("Hey! I'm Joe, a developer at Private AI!")
```

The entire code is below for you to copy and paste 😉

```python
from privateai_client import PAIClient, request_objects
import openai
import os

# Initialize the OpenAI client
openai.api_key = os.getenv('OPENAI_KEY')

# Initialize the Private AI client
PRIVATEAI_SCHEME = 'http'
PRIVATEAI_HOST = 'localhost'
PRIVATEAI_PORT = '8080'

client = PAIClient(PRIVATEAI_SCHEME, PRIVATEAI_HOST, PRIVATEAI_PORT)


def redact_text(text):
    text_request = request_objects.process_text_obj(text=[text])
    return client.process_text(text_request)


def reidentify_text(text, entities):
    entity_mappings = list(map(map_entity, entities))
    reid_request = request_objects.reidentify_text_obj(processed_text=[text], entities=entity_mappings)
    reid_text = client.reidentify_text(reid_request)
    return reid_text.response.json()[0]


def map_entity(entity):
    return {
        'processed_text': entity['processed_text'],
        'text': entity['text']
    }


def prompt_chat_gpt(text):
    completion = openai.ChatCompletion.create(
        model="gpt-3.5-turbo",
        messages=[{"role": "user", "content": text}]
    )
    return completion.choices[0].message['content']


def prompt_private_gpt(prompt):
    print("******* ORIGINAL TEXT *******")
    print(prompt)

    redacted_object = redact_text(prompt)
    print("\n******* REDACTED TEXT **********")
    print(redacted_object.processed_text)

    chatgpt_response = prompt_chat_gpt(redacted_object.processed_text[0])
    print("\n******* OPEN AI RESPONSE WITH REDACTIONS ********")
    print(chatgpt_response)

    reidentified_text = reidentify_text(chatgpt_response, redacted_object.entities[0])
    print("\n******* OPEN AI RESPONSE REIDENTIFIED ********")
    print(reidentified_text)

    return reidentified_text


openai_response = prompt_private_gpt("Hey! I'm Joe, a developer at Private AI!")
```
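The code above targets the pre-1.0 openai package. With openai 1.x installed, openai.ChatCompletion no longer exists and the call fails. Below is a minimal sketch of an equivalent `prompt_chat_gpt` for the 1.x client (`OpenAI().chat.completions.create`). It was not part of the tested setup, so treat it as an untested adaptation. The optional `client` parameter is our own addition: it lets you exercise the function with a stand-in client instead of calling OpenAI.

```python
import os


def prompt_chat_gpt(text, client=None):
    """Send one user message to gpt-3.5-turbo with the openai>=1.0 client."""
    if client is None:
        # Imported lazily so this module loads even where openai is absent
        from openai import OpenAI
        client = OpenAI(api_key=os.getenv("OPENAI_KEY"))
    completion = client.chat.completions.create(
        model="gpt-3.5-turbo",
        messages=[{"role": "user", "content": text}],
    )
    # In 1.x the message is an object, so use .content rather than ['content']
    return completion.choices[0].message.content
```

Drop it in place of the original prompt_chat_gpt. prompt_private_gpt calls it the same way, so the rest of the script is unchanged.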